Overview of Data Brokering with Node.js

Node.js is well suited to moving and processing data between systems. With Node, you can share data between services without tightly coupling applications together or rewriting them. For example, you might want to use data from a legacy API in a modern frontend application. Data brokering saves us from having to rewrite the underlying systems or make them directly aware of each other. There are many ways to process data with Node.js, including:

- A thin proxy API (back end for front end - BFF)

- An Extract, Transform, Load (ETL) pipeline

- Message queues

These processes sit between the different systems we need to connect and enable us to take data from one system and use it in another. In this tutorial series, we'll expand on these concepts and teach how to build examples of each of these data brokering processes using Node.js.

In this tutorial, we'll:

- Define data brokering

- Identify different techniques for brokering data

- Identify the problems that data brokering solves

By the end of this tutorial, you should understand what processing data with Node.js looks like, and how it helps connect systems.

Goal

Understand what data brokering is and different approaches to implementing it in Node.js.

Prerequisites

- None


The problem

Technology itself moves fast. But the systems we use in our applications often can't change, or don't change nearly as rapidly as our applications. Today we are using more data than ever before, from a variety of different sources, some of which we control and some we don't. As business needs change, we don't always know what the requirements of a particular system will be tomorrow.

Often we have data locked away in a legacy API, third party system, or otherwise siloed off somewhere. Then one day we need to access that data from a new frontend application, or anywhere else in our stack where we weren't accessing that data before. This introduces the challenge of: how do we share data across our applications, without tightly coupling our applications together or rewriting them?

It's a familiar problem: the business's needs change, and relationships between systems need to adapt. For example, maybe you need data from your accounting system to help understand which items in your inventory system aren't selling well, so you can figure out which items to put on sale. Let's assume the two systems were built at different times with different goals in mind. The two systems are both core to your business, and making drastic changes in either one isn't a risk your company is willing to take. You still need to work with that data, but want to limit any changes that need to be made to the two systems.

If we need to make two systems talk to each other, where do we make the change?

Data brokering

To access your data wherever it is and get it to go wherever it needs to go, you can build something which sits between applications and facilitates the transfer of data for you. This "glue" piece of software can be focused entirely on talking to the specific systems it is concerned with, and nothing more. This approach can be implemented with varying degrees of coupling between the applications.

You can call this approach data brokering: moving data between disparate systems. The goal of data brokering is to expose data from one system to another, without those systems having to know anything about each other. This is generally useful in the applications we build, separating concerns between systems and keeping coupling low. But data brokering is especially useful in scenarios where we don't control our sources of data, or aren't able to modify them. That's a common restriction when working with legacy APIs or third party services.

Ultimately, data brokering helps us create decoupled applications and services. They're decoupled in the sense that each piece is not heavily dependent on the others. We can make changes in one part of the system without breaking other parts.

Why Node.js?

With the rise of Node.js and its ever-expanding ecosystem, we can build the intermediary apps that will ensure that we are able to access data from wherever it is needed, in the format we need it.

Node.js benefits from the ecosystem of npm packages for interacting with different data sources. You can quickly start connecting to almost any type of data source using packages available from npm. This speeds up development because you don't have to write much low-level code for connecting to a data source. Find an npm package which helps you connect to the type of data source you're using (HTTP API, WebSocket, MySQL database, etc.) and you're ready to start writing code.

The event-driven design of Node.js also makes it a good choice for applications which need to sit and wait for interaction. Because its event loop handles I/O without blocking, a single Node.js thread can manage a large number of concurrent connections while using relatively few resources. This is useful for data brokering applications because they often sit in the background waiting for interaction to occur. This event-driven model also works quite well in the world of serverless applications. In a serverless context, you typically pay only for the time your code actually runs. Instead of maintaining additional servers in your stack, your Node.js code could run on a serverless platform and only run when there is data to process.

Node.js also has a gentle learning curve, especially if you're familiar with JavaScript. If a developer is already working in JavaScript on the frontend, they can apply a lot of that knowledge to building applications with Node.js. This can effectively increase the number of backend developers on your team, and promote a full-stack experience for your developers, because the frontend and backend have JavaScript in common.

Lastly, Node.js makes quick iteration possible. This is partly because of the npm ecosystem we already mentioned and the vast number of tools available within it. If you make good use of this ecosystem, it is possible to get a Node.js project up and running without needing to write the application from the ground up. Furthermore, by using tools available on npm, we can reduce the overall amount of code that needs to be written and shorten the time it takes to ship software.

Examples of data brokering

That all sounds good, but what can this look like in practice?

We can achieve this through many methods, but the three that we will focus on are:

- Thin proxy API

- Extract, Transform, and Load (ETL) Pipeline

- Message queue

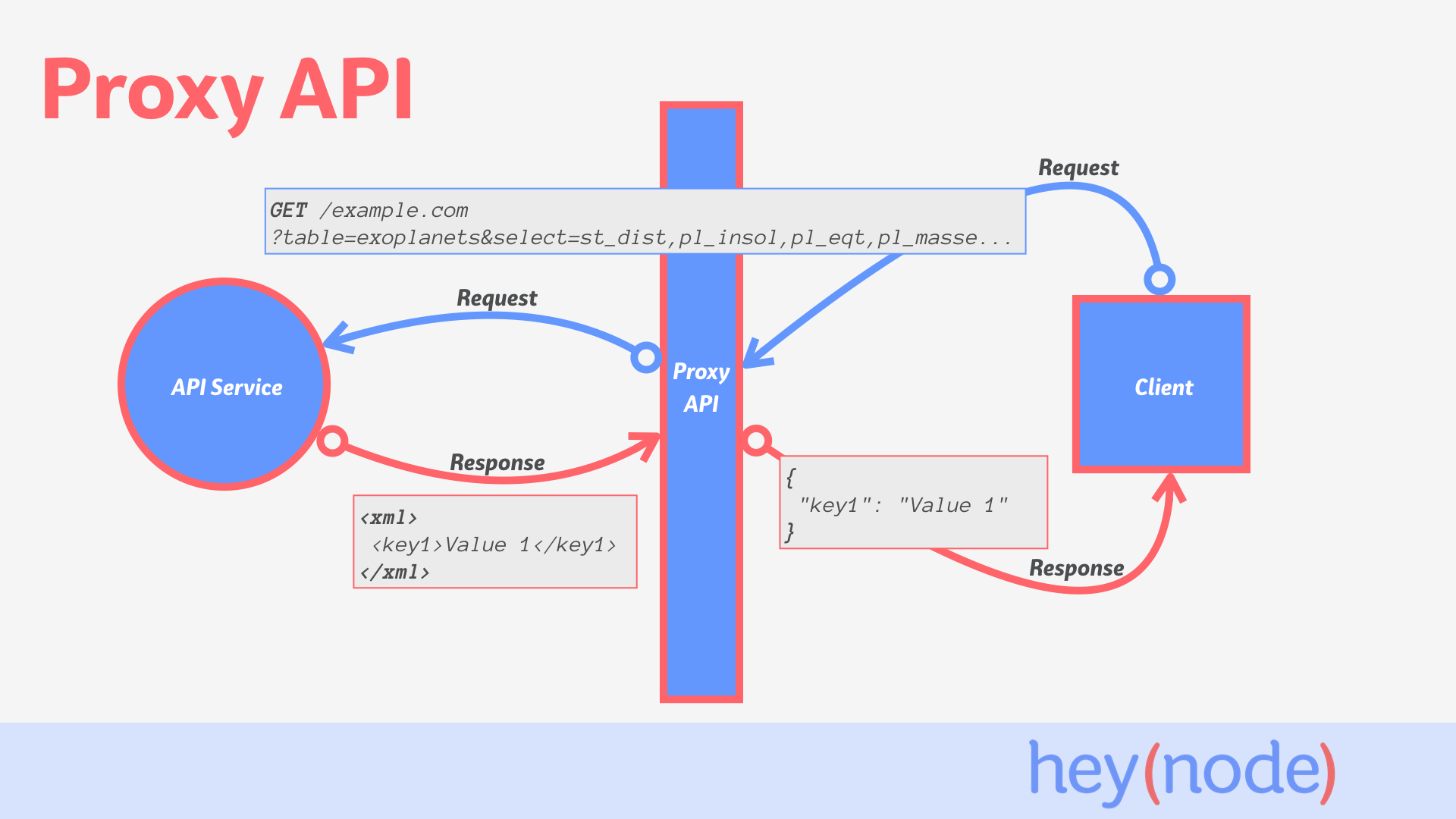

Proxy API

A proxy API is a thin API server which translates requests and responses between another API and an API consumer. "Thin" here means that this API server is a lightweight solution that does a single job.

In this approach to data brokering, the proxy API serves as a friendly interface for a consumer, who doesn't need to know how the requests to the underlying service are actually fulfilled. In this example, a consumer is any client or application which wants to request data, and a service is the underlying source of the data that the proxy API communicates with to fulfill the request.

Proxy APIs are great when your objective is to serve data from a known service in a different format without knowing anything about the consumer client. You know the original format the service uses, and the desired format for the consumer, but you don't know (or don't care) who the consumer is going to be.

A proxy API will only expose the data the consumer needs, in a format the consumer can easily work with. It translates between the consumer's request and what the underlying API expects requests to look like. It's essentially exposing a subset of the functionality and data available from the underlying API service.

For example, the publicly available NASA Exoplanet API expects requests with SQL queries passed through query strings in the URL, and returns data in JSON, CSV, or ASCII format. The keys in the JSON response all refer to the column names for the particular table they were pulled from. The keys are ugly, not quite human readable, sometimes refer to duplicated data, and are in general fairly confusing. Luckily they still provide a JSON format! Beyond the helpful JSON response format provided, the API interaction is not particularly standard, and certainly not very developer-friendly.

Imagine for a moment that you or your team need to work with this API for a new public frontend that you are building. Instead of having developers stuff SQL queries into URLs, and otherwise learn the ins and outs of this strange API interaction, you can build a lightweight API server that sits in front of the NASA API. The new API will expose only the data which the frontend needs, in the format it is needed, and consumers can interact with the new API in an easier-to-use format than passing SQL queries through a URL. You can even cache data at the new API, to reduce round trips to the NASA server and improve performance of your frontend application.
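
As a rough illustration of that idea, here is a minimal sketch of a proxy handler built only on Node.js built-ins. The `/planets/<year>` route, the friendly key names, and the upstream endpoint, table (`ps`), and column names are assumptions made for this example, so check the NASA Exoplanet Archive documentation before relying on them. The upstream call is passed in as `fetchJson` so it can be swapped or stubbed.

```javascript
// ASSUMPTION: the endpoint, table, and column names below are illustrative.
const UPSTREAM_BASE = 'https://exoplanetarchive.ipac.caltech.edu/TAP/sync';

// Build the upstream URL so consumers never see the SQL-in-a-query-string detail.
// Only a validated integer is interpolated into the query.
function buildUpstreamUrl(discoveryYear) {
  const year = Number.parseInt(discoveryYear, 10);
  if (!Number.isInteger(year)) {
    throw new TypeError('discoveryYear must be an integer');
  }
  const url = new URL(UPSTREAM_BASE);
  url.searchParams.set(
    'query',
    `select pl_name,hostname,disc_year from ps where disc_year=${year}`
  );
  url.searchParams.set('format', 'json');
  return url.toString();
}

// Translate one upstream row into the shape the frontend wants (hypothetical keys).
function toPlanet(row) {
  return {
    name: row.pl_name,
    hostStar: row.hostname,
    discoveryYear: row.disc_year,
  };
}

// Returns a request handler compatible with Node's built-in http server.
function createProxyHandler(fetchJson) {
  return async function handle(req, res) {
    const { pathname } = new URL(req.url, 'http://localhost');
    const match = pathname.match(/^\/planets\/(\d{4})$/);
    if (req.method !== 'GET' || !match) {
      res.writeHead(404, { 'Content-Type': 'application/json' });
      res.end(JSON.stringify({ error: 'Not found' }));
      return;
    }
    try {
      const rows = await fetchJson(buildUpstreamUrl(match[1]));
      res.writeHead(200, { 'Content-Type': 'application/json' });
      res.end(JSON.stringify(rows.map(toPlanet)));
    } catch (err) {
      // Hide upstream details from the consumer; report a gateway error.
      res.writeHead(502, { 'Content-Type': 'application/json' });
      res.end(JSON.stringify({ error: 'Upstream request failed' }));
    }
  };
}

// Default upstream fetcher, using the global fetch available in Node.js 18+.
async function fetchJson(url) {
  const response = await fetch(url);
  if (!response.ok) {
    throw new Error(`Upstream responded with ${response.status}`);
  }
  return response.json();
}
```

To serve it, you could pass the handler to the built-in `http` module, for example `require('node:http').createServer(createProxyHandler(fetchJson)).listen(3000)`. A consumer would then request `/planets/2011` and receive readable keys, without ever writing a SQL query.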

This is very useful when working with legacy APIs, APIs in a format like SOAP, or any situation where you need consumers to talk to an API which follows an unfamiliar pattern. The benefits are that you can expose a stable interface in a familiar form to other applications, while custom tailoring the request and response pattern of the proxy API to whatever standard the consumer requires. If the format of the underlying service changes at any time, you can make the appropriate changes in how the proxy API talks to the service, without having to break the API contract you are exposing to consumers.

You may have already done something similar before. If you've ever had to make requests from a server to an API that doesn't allow cross-origin requests from the browser, you've used this pattern.

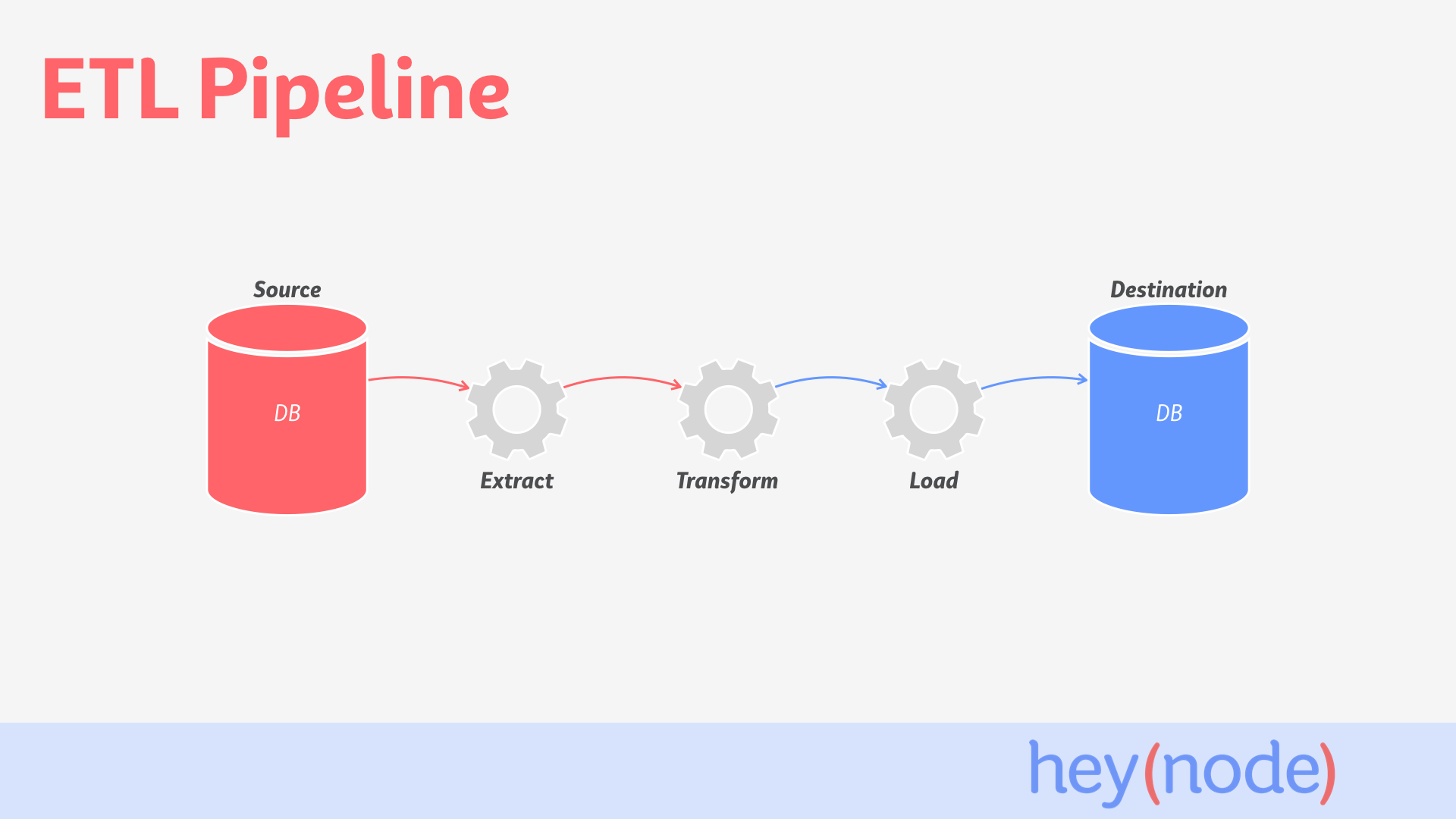

Extract, Transform, Load (ETL) Pipeline

Another approach to data brokering is using an ETL pipeline. Here, ETL stands for extract, transform, and load. This is a common approach in moving data from one location to another, while transforming the structure of the data before it is loaded into its destination.

ETL is a process with three separate steps. It's often called a "pipeline" because data moves through these three steps, from beginning to end.

- Extract the data from wherever it is, be that a database, an API, or any other source.

- Transform the data in some way. This could be restructuring the data, renaming keys in the data, removing invalid or unnecessary data points, computing new values, or any other type of data processing which converts what you have extracted into the format you need it to be.

- Load the data into its final destination. Whether that final destination is a database or simply a flat file, the location doesn't matter. You've just loaded it to wherever it needs to go, in the format you need it to be in.
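
The three steps above can be sketched as three small functions. This is a minimal illustration rather than a production pipeline: the sample records, the field names, and the array used as a destination are all hypothetical stand-ins for a real source and target.

```javascript
// Extract: in a real pipeline this might query a database or call an API.
// The records here are hard-coded, hypothetical sample data.
async function extract() {
  return [
    { sku: 'A-100', qty_sold: '12', unit_price: '2.50' },
    { sku: 'B-200', qty_sold: '0', unit_price: '9.99' },
    { sku: '', qty_sold: '3', unit_price: '1.00' }, // invalid: no SKU
  ];
}

// Transform: drop invalid rows, rename keys, and compute a new value.
function transform(rows) {
  return rows
    .filter((row) => row.sku !== '')
    .map((row) => {
      const quantitySold = Number(row.qty_sold);
      const unitPrice = Number(row.unit_price);
      return { sku: row.sku, quantitySold, revenue: quantitySold * unitPrice };
    });
}

// Load: write to the destination. An array stands in for a database or file.
async function load(records, destination) {
  destination.push(...records);
  return records.length;
}

// Run the steps in order and report how many records were loaded.
async function runPipeline(destination) {
  const raw = await extract();
  const clean = transform(raw);
  return load(clean, destination);
}
```

Keeping each step in its own function means you can change where the data comes from, or where it ends up, without touching the transformation logic.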

ETL pipelines make the most sense when you need to move data from one known system to another known system, and need to optionally translate the content en route. The difference between a proxy API and an ETL pipeline is that both ends of the ETL pipeline are known. With a proxy API, you don't necessarily know where the data is going to end up, and you let the consumer make requests as needed.

Examples of an ETL pipeline could be moving large amounts of data at once, pulling reports, or migrating data into a new database or warehouse. Whenever you have data in one format but want to move it somewhere else in another format, an ETL pipeline is a powerful way to move that data in bulk. This approach is most appropriate for scenarios where you want the data to eventually rest in a new location. The ETL pipeline handles getting the data to that new location in the format you need it to be.

Typically, an ETL pipeline is run as a batch job. You move all the data you need at once, as opposed to an approach like a proxy API where you are exposing an interface for consumers to retrieve data on demand. This batching could happen once every 12 hours, every week, or as a one-off job that is run when needed.

This approach is especially useful for large amounts of static data. If you need to convert hundreds of gigabytes of data stored in flat files into a new format, or compute new data based on those hundreds of gigabytes, an ETL pipeline is a natural fit for processing the data and sending it somewhere else.
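
For files of that size, reading everything into memory at once is rarely practical. One possible approach, sketched here with Node's built-in `fs` and `readline` modules, is to process the file one line at a time. The `name,value` input format with a header row and the newline-delimited JSON output are assumptions chosen for this example, and the parsing does not handle quoted commas.

```javascript
const fs = require('node:fs');
const readline = require('node:readline');
const { once } = require('node:events');

// Convert a simple CSV-like flat file to newline-delimited JSON, line by line,
// so memory use stays roughly constant regardless of file size.
// ASSUMPTION: "name,value" rows, one header row, no quoted commas.
async function convertFile(inputPath, outputPath) {
  const lines = readline.createInterface({
    input: fs.createReadStream(inputPath),
    crlfDelay: Infinity, // treat \r\n as a single line break
  });
  const output = fs.createWriteStream(outputPath);
  let isHeader = true;
  let count = 0;

  for await (const line of lines) {
    if (isHeader) {
      isHeader = false; // skip the header row
      continue;
    }
    if (line.trim() === '') continue; // skip blank lines

    const [name, value] = line.split(',');
    const record = JSON.stringify({ name: name.trim(), value: Number(value) });

    // Respect backpressure: wait for 'drain' when the write buffer is full.
    if (!output.write(record + '\n')) {
      await once(output, 'drain');
    }
    count += 1;
  }

  output.end();
  await once(output, 'finish'); // wait until everything is flushed
  return count;
}
```

Calling `convertFile('input.csv', 'output.ndjson')` (both file names are placeholders) resolves with the number of records written.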

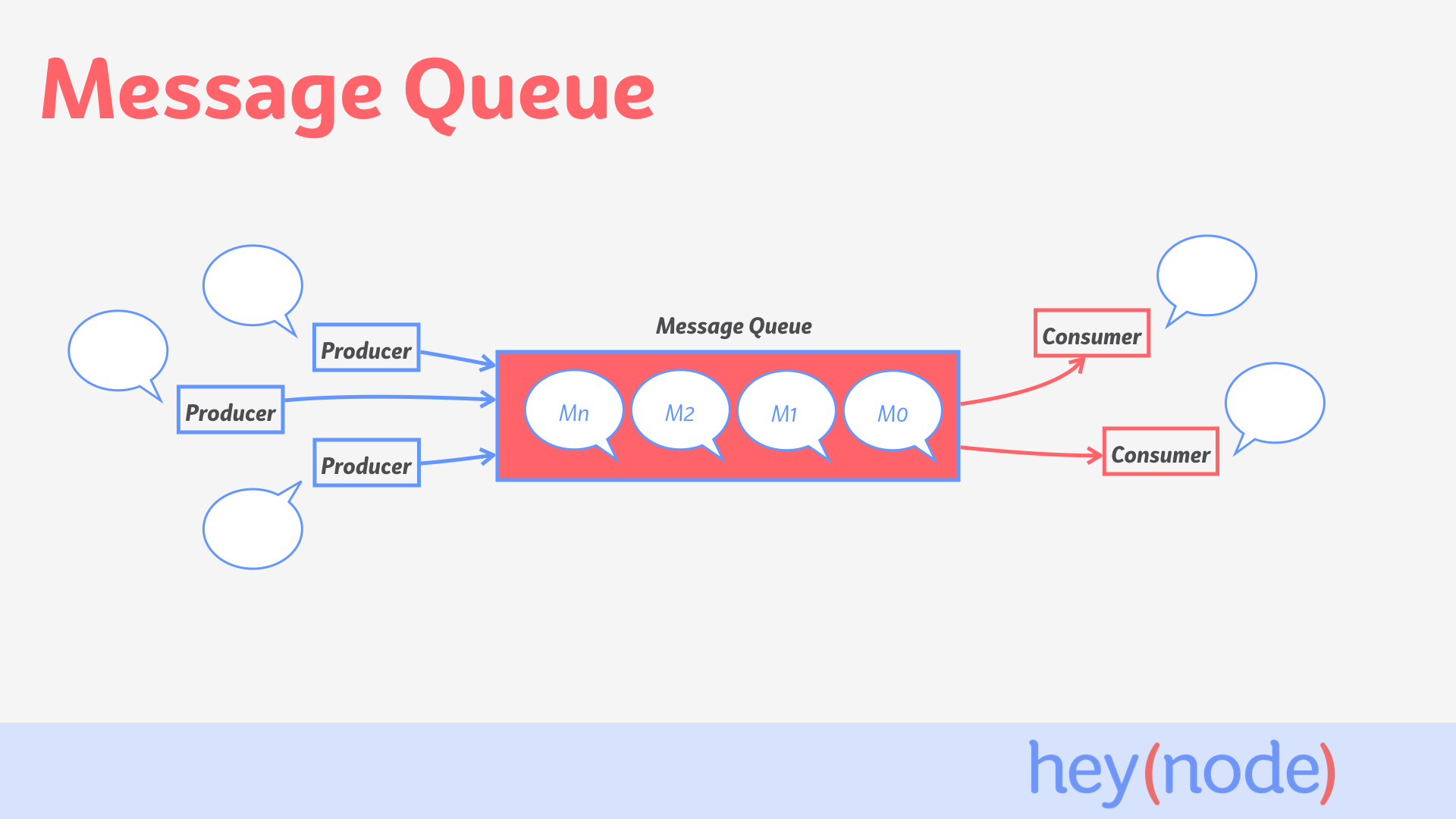

Message queue

Message queues are another powerful way to broker data between applications. A message queue stores the "messages" sent to it in sequential order until a consumer is ready to retrieve them. A message can be any piece of data, like a log event, a row from a database, or anything else which is being sent from one part of your application to another.

Message queues work with producers and consumers:

- Producer: sends messages to the queue

- Consumer: takes messages off the queue

This decouples the relationship between the producer and consumer. The producer can send messages to the queue and not worry about waiting to hear back if its message was processed or not. The message will sit in the queue in the order it was received until a consumer is ready to handle the message. This makes message queues a form of asynchronous communication.

The producer and consumer don't need to be aware of each other, and the producer can continue adding messages to the queue even if the consumer is currently overwhelmed with load. The queue itself will persist the messages so none are lost if there isn't an available consumer, or if the rate at which messages are being added is higher than the consumers can currently handle.
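
To make the producer and consumer roles concrete, here is a toy sketch. It assumes an in-memory array standing in for a real broker such as RabbitMQ, so unlike a real queue it does not persist messages across restarts. The class and function names are hypothetical.

```javascript
// ASSUMPTION: an in-memory array stands in for a real message broker.
// It keeps first-in, first-out order but offers no persistence.
class InMemoryQueue {
  constructor() {
    this.messages = [];
  }

  // Producer side: add a message and return immediately.
  send(message) {
    this.messages.push(message);
  }

  // Consumer side: take the oldest message, or undefined if the queue is empty.
  receive() {
    return this.messages.shift();
  }

  get size() {
    return this.messages.length;
  }
}

const queue = new InMemoryQueue();

// The producer knows only about the queue, not about any consumer.
function recordPageView(path) {
  queue.send({ type: 'page_view', path });
}

// The consumer drains whatever has accumulated, whenever it is ready.
function drain(handler) {
  let handled = 0;
  let message;
  while ((message = queue.receive()) !== undefined) {
    handler(message);
    handled += 1;
  }
  return handled;
}
```

The producer can call `recordPageView` as often as it likes while no consumer is running. The messages wait in order until `drain` is called.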

It's this persistence and asynchronous (async) communication which makes message queues straightforward to scale. Your applications can tolerate spikes in load, because if at any point messages are coming in faster than the consumers can handle, none of the messages are actually lost. They will sit in the queue in the order they were received until either more consumers can be spun up to handle the increased load, or a consumer becomes available to deal with the message. You can also have consumers process batches of messages instead of dealing with just one at a time, which also helps to absorb load in your system.

A common example would be delivering webhooks. For example, if you made a commit in GitHub, a producer would send a message to the queue that says a commit was made. Eventually a consumer will pick up that message, and based on the content, know that it needs to make an HTTP POST to a remote server. The consumer attempts to do so, but the remote server fails to respond. Instead of just giving up, the message is left in the queue for another attempt later. When a consumer encounters the message again, it tries to perform the HTTP POST a second time. Maybe this time it succeeds, and afterwards the message is removed from the queue. This introduces a high level of resiliency to the delivery of webhook notifications. Additionally, if at any time the number of messages in the queue gets really large, additional consumers can be added to help work through the backlog.
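
The retry behavior in this example can be sketched as follows. This is a simplified illustration under several assumptions: the queue is a plain array, `deliver` is a hypothetical async function that performs the HTTP POST and rejects on failure, and the `maxAttempts` limit is an addition for the sketch. A failed message is put back at the end of the array here, whereas a real broker would typically keep it in the queue until the consumer confirms success.

```javascript
// Process the next webhook message from an array-based queue.
// Each message looks like { payload, attempts } (a hypothetical shape).
// Returns a string describing what happened, to make the flow easy to follow.
async function processNext(queue, deliver, maxAttempts = 3) {
  const message = queue.shift();
  if (message === undefined) return 'empty';

  try {
    await deliver(message.payload);
    return 'delivered'; // success: the message stays out of the queue
  } catch (err) {
    const attempts = message.attempts + 1;
    if (attempts >= maxAttempts) {
      return 'dropped'; // give up after too many failures
    }
    // Failure: put the message back so a consumer can retry it later.
    queue.push({ ...message, attempts });
    return 'requeued';
  }
}
```

Calling `processNext` repeatedly, from one consumer or several, works through the backlog while failed deliveries get another chance.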

You can use message queues to solve many different problems when connecting parts of your applications in a decoupled way. But they really shine when dealing with high volumes of realtime events which occur in your system. This could be delivering webhooks like the example above, processing payments, or tracking page views in your application. In any scenario where two systems need to communicate and persistence, resiliency, or batching are highly important, a message queue could be the right solution.

Recap

Data brokering helps us connect different parts of our applications together while keeping them from directly relying on one another. Instead of completely rewriting applications to talk directly to each other, we can build something which sits between them. You can use data brokering techniques to connect systems that you control and ones that you don't.

Three powerful approaches are using a proxy API, an ETL pipeline, or message queues.

- A proxy API sits between an underlying API and the consumer requesting data. The underlying API is known, but the consumer doesn't necessarily have to be known ahead of time.

- An ETL pipeline takes data from one source, processes it, and then loads it into its final destination. Both ends of an ETL pipeline should be known: you know how to access the source of the data, and you know where it is going to end up.

- A message queue allows multiple systems to communicate asynchronously, by sending messages to a persistent queue to then be processed whenever a consumer is ready. A queue doesn't need to know anything about the producer adding messages to the queue, or the consumer processing messages from the queue.

Further your understanding

- Explain in your own words what data brokering is used for.

- If you need to provide an interface for querying a database, which approach would you choose and why?

- What are the differences between an ETL pipeline and a message queue? What are the benefits of each?

- Can you think of problems you've encountered before that data brokering can help solve?

Additional resources

- NASA Exoplanet API (exoplanetarchive.ipac.caltech.edu)

- RabbitMQ Message queue software (rabbitmq.com)
